School of Chemistry, Faculty of Science, University of Bristol

Dr Chris Adams

Peerwise is an online tool developed and hosted by the University of Auckland. It allows students to author and answer multiple-choice questions (MCQs) and to assess their quality and comment upon them.

A lecture on multiple-choice questions was delivered to all first-year students. It began by having the students complete the famous ‘Grunge Prowkers’ content-free quiz to illustrate the basic points of MCQ design. It then discussed MCQs in the context of Bloom’s Taxonomy, pointing out that students typically prepare for MCQ exams by answering questions from past papers, whereas they would learn more by authoring MCQs themselves. We finished by discussing Peerwise itself and the work of Galloway and Burns (Chem. Educ. Res. Pract., 2015, 16, 82-92) in the Chemistry Department at Nottingham.

This was followed by a workshop. Before the workshop, students were asked to log in to Peerwise and author at least one MCQ. The workshop activity was for them to try out each other’s questions and to critique and rate them for correctness and difficulty; all of this can be done within Peerwise.

After the workshop, students were asked to author at least two more MCQs, answer 10 more, and rate and comment on 3 more. This was scheduled so that it had to be completed before their MCQ exam.

Over the course of the activity, 153 students authored 370 questions, and a total of 6784 answers were supplied. The most active author supplied 10 questions. The most active answerer answered 362 questions (306 correctly and 56 incorrectly) but authored only 3.

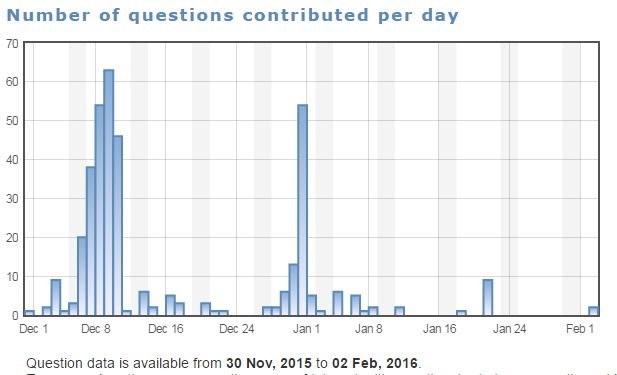

The graph below shows the number of questions authored by the students per day. The workshops were held in the week commencing December 7th, and the deadline for students to gain credit for question writing was January 1st.

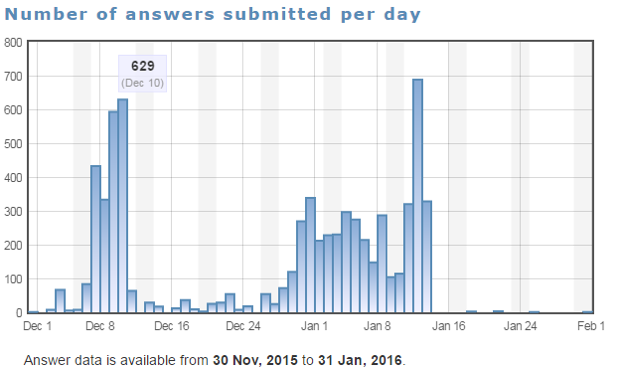

The next graph shows the number of questions answered per day. Again, the deadline for credit was January 1st, but the exam was not until January 15th, and students continued answering questions after the credit deadline, indicating that many were using Peerwise as a revision tool.

Participants also rate the questions for quality. On inspection by the instructor, many of the top-rated questions are of good quality, so as a bonus we now have a bank of student-authored MCQs that can be used in future exams.

The whole exercise was well received by students. Peerwise is very easy to use and is thoroughly recommended.

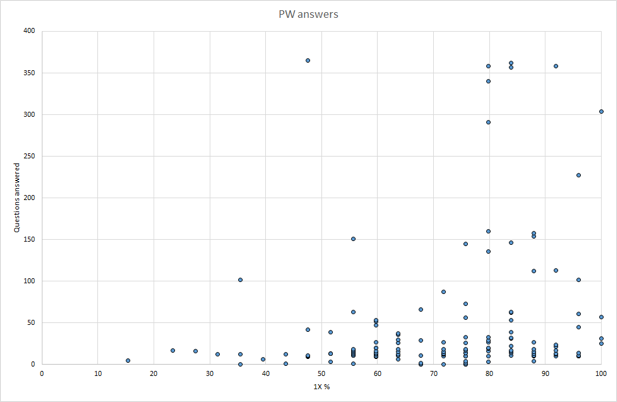

Overall, 50% of the class (97 out of 194) scored more than 70% in the MCQ exam (the exam was easy!). However, of the students who answered more than 50 Peerwise questions, 26 out of 32 (81%) achieved this level, although no causation is claimed (Figure 3).

Figure 3: Correlation of MCQ exam mark (horizontal axis) with number of Peerwise questions answered (vertical axis)
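Splitting the class into the two groups makes the comparison explicit. Assuming that the 32 students who answered more than 50 questions are among the 194 who sat the exam, the proportion of the remaining students scoring above 70% follows directly from the figures above:

```latex
\begin{aligned}
\text{whole class:} &\quad \frac{97}{194} = 50\% \\
\text{answered more than 50 questions:} &\quad \frac{26}{32} \approx 81\% \\
\text{all other students:} &\quad \frac{97 - 26}{194 - 32} = \frac{71}{162} \approx 44\%
\end{aligned}
```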

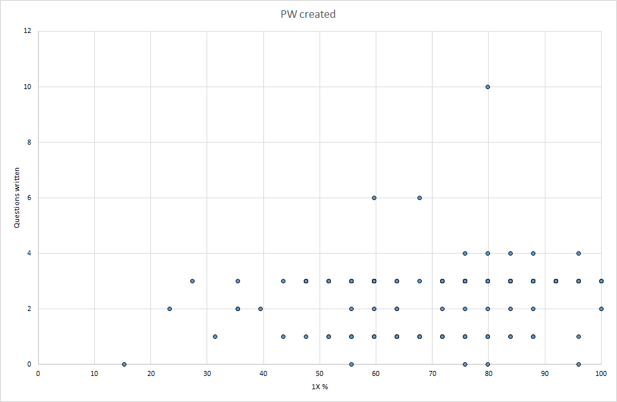

Overall, however, engagement with the activity was disappointing, and many students did only the minimum required for credit. Only 8 students authored more than the minimum of 3 questions (Figure 4). One student answered 340 questions but wrote only 1.

Figure 4: Correlation of MCQ exam mark (horizontal axis) with number of Peerwise questions created (vertical axis)

The plan is to repeat the activity next year, but to try to increase student engagement by emphasising the ‘gamification’ aspects of the activity. Participants can earn badges and a ‘reputational rating’ by answering, authoring and rating questions, and it is hoped that introducing a small element of competition will increase participation.