Making sense of uncertainty in complex systems

Statistical analysis of the uncertainty inherent in models of complex systems, such as the climate, helps bring scientific evidence into policy-making.

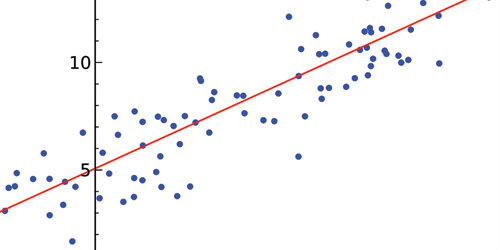

Scientists who study complex systems use models to bridge the gap between the measurements and observations that they are able to make and the system they are trying to explain.

Professor Jonathan Rougier is a statistician who specialises in assessing the uncertainty inherent in complex systems, of the kind that is typical in environmental science.

To understand how climate has changed in the last 10,000 years, for example, a scientist might use pollen fragments collected from lake sediment cores to identify which tree species were present 10,000 years ago.

The information would then be processed through a model that represents the relationship between tree species and climate properties such as temperature and precipitation.

This allows the scientist to infer past climate from observations of ancient pollen fragments.
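One widely used family of such models in palaeoecology is the weighted-averaging transfer function: each taxon is assigned a climate "optimum", and a past temperature is estimated as the abundance-weighted mean of those optima. The minimal sketch below assumes that technique purely for illustration, not as the model any particular study uses; the taxa, optima and pollen counts are hypothetical, and the deshrinking step applied in practice is omitted.

```python
# Hypothetical taxon temperature optima (degrees C). In practice these would be
# estimated from a modern calibration set pairing pollen samples with climate data.
TAXON_OPTIMA = {
    "Betula": 8.0,
    "Pinus": 10.0,
    "Quercus": 14.0,
    "Tilia": 15.5,
}


def weighted_average_reconstruction(counts: dict[str, float], optima: dict[str, float]) -> float:
    """Estimate a past temperature as the abundance-weighted mean of taxon optima.

    Basic weighted averaging only; the deshrinking regression used in real
    reconstructions is deliberately left out of this sketch.
    """
    # Only taxa with a known optimum can contribute to the estimate.
    shared = [taxon for taxon in counts if taxon in optima]
    total = sum(counts[t] for t in shared)
    if total <= 0:
        raise ValueError("no pollen counts for taxa with known optima")
    return sum(counts[t] * optima[t] for t in shared) / total


# Hypothetical pollen counts from a single sediment-core sample.
sample = {"Betula": 40, "Pinus": 35, "Quercus": 20, "Tilia": 5}
estimate = weighted_average_reconstruction(sample, TAXON_OPTIMA)
print(f"Reconstructed temperature: {estimate:.2f} C")
```

The point estimate on its own says nothing about how far it might be from the true past temperature, which is exactly the gap that a statistical treatment of uncertainty has to fill.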

These models can carry considerable uncertainty, so, Rougier explains, the statistician has a particularly important role: accounting for the systematic ways in which the model is an imperfect representation of the system being studied.

Bringing scientific evidence into policy-making

Models are powerful tools for describing how complex systems work and can offer valuable insights, so long as their limitations are understood and respected.

However, as science has been harnessed to the needs of policy, scientists have had to venture beyond using models as explanatory tools and start using them as predictive tools.

This is where understanding and accounting for uncertainty becomes particularly important, because it places scientific predictions in the context of how little we truly know about the future.

“Right now, it’s hard to get people to ’fess up about how uncertain their model-based predictions really are. This is partly because a glaciologist, for example, can provide detailed judgements about the physics behind how an ice sheet might respond to ocean warming, but it is a statistician who has the tools to assess and represent the uncertainties associated with that.”

Professor Jonathan Rougier

On the impact pipeline between scientists and policy-makers, Rougier admits that he sits at the end closest to the scientists. He is much more interested in working directly with scientists, behind the scenes, to improve models of complex systems.

From climate to natural hazard prediction, Rougier works on systems that defy accurate modelling yet have significant policy relevance for society: “This is what ultimately interests me, the role of science in bringing evidence into policy-making”.

Addressing uncertainty in climate models

Rougier’s work has already had a direct impact. He has worked with the Met Office Hadley Centre, one of the world’s leading climate change research centres, for about ten years, and with other climate groups in Europe and the US.

Rougier has helped to identify and address the sources of uncertainty inherent in climate models.

He points to ‘parameter uncertainty’ in complex simulator code as one of the key things to incorporate into uncertainty assessments for predictive models. A large climate simulator may have hundreds of parameters, he explains, and the values of many of them remain unknown.

Some are abstract values standing in for physics that is poorly understood, or that is too computationally expensive to program and run explicitly. Because a simulator must have a value for every parameter in order to run, candidate values are tested to see whether or not the model then gives realistic answers.
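The idea of testing parameter values against reality can be illustrated with a deliberately simple screening scheme: draw many candidate settings from plausible ranges, run the simulator at each, and keep only those whose output lies close to an observation. In this minimal sketch the simulator `toy_simulator`, its two parameters, their ranges, the observed value and the tolerance are all hypothetical, and the scheme is an illustration rather than the procedure used by the Met Office or any other group.

```python
import random


def toy_simulator(sensitivity: float, mixing: float) -> float:
    """Hypothetical stand-in for an expensive simulator: two uncertain
    parameters map to a single simulated quantity."""
    return 3.0 * sensitivity / (1.0 + mixing)


def screen_parameters(observed: float, tolerance: float, n_runs: int, seed: int = 0):
    """Sample parameter settings uniformly from assumed ranges and keep those
    whose simulated output is within `tolerance` of the observation."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_runs):
        sensitivity = rng.uniform(0.5, 2.0)  # assumed plausible range
        mixing = rng.uniform(0.0, 1.0)       # assumed plausible range
        output = toy_simulator(sensitivity, mixing)
        # A setting counts as "realistic" if it reproduces the observation closely.
        if abs(output - observed) <= tolerance:
            accepted.append((sensitivity, mixing, output))
    return accepted


runs = screen_parameters(observed=2.0, tolerance=0.2, n_runs=5000)
print(f"{len(runs)} of 5000 parameter settings retained")
```

Many different settings typically survive the screening, which is why the retained spread of parameter values, rather than a single tuned value, carries the parameter uncertainty forward into predictions. Real climate simulators are far too expensive to run thousands of times in this way.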

The other main source of uncertainty in climate models is structural uncertainty, which exists because the model is an imperfect representation of the system being studied.

Computational constraints, among other things, limit the ability of climate models to capture all of the complex interactions between biological, chemical, and physical processes at the global scale. This means that it would be unrealistic to suppose that any setting of the parameters could be ‘correct’.
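One common way statisticians write this down, offered here as an illustration rather than as the specific formulation used by Rougier or the Met Office, separates the simulator from the system it represents. Write $f(x)$ for the simulator output at parameter setting $x$, $x^{*}$ for a ‘best’ parameter setting, $y$ for the true state of the system, $\delta$ for the structural discrepancy, $z$ for an observation and $\varepsilon$ for measurement error:

```latex
\begin{aligned}
y &= f(x^{*}) + \delta, \\
z &= y + \varepsilon.
\end{aligned}
```

Because $\delta$ is not zero, even the best setting $x^{*}$ does not reproduce the system exactly. Treating $\delta$ as an uncertain quantity, rather than ignoring it, keeps predictions from being more confident than the simulator's structure justifies.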

Accounting for some sources of uncertainty requires highly sophisticated statistics. However, Rougier says, there are other areas where small improvements in practice can have a profound impact on the outcome.

For example, some statisticians feel that experimental design is both the most useful and the most neglected aspect of applied science. There is now a large statistical literature on computer experiments (experiments run on a computer simulator in place of the system itself).

A small amount of additional time spent on design can pay huge dividends, both in reducing the number of experimental runs required and in simplifying the resulting analysis.
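A Latin hypercube is one widely used design in the computer-experiments literature, shown here as an example rather than as the design any particular group uses. Each parameter's range is split into as many equal strata as there are runs, and every stratum is sampled exactly once, so even a small number of runs covers each parameter's range evenly. The function name `latin_hypercube`, the parameter ranges and the run count below are hypothetical.

```python
import random


def latin_hypercube(n_runs: int, bounds: list[tuple[float, float]], seed: int = 0):
    """Return n_runs parameter settings forming a Latin hypercube design.

    Each parameter's range is divided into n_runs equal strata, and exactly one
    value is drawn from each stratum.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        width = (high - low) / n_runs
        # One value drawn uniformly within each stratum of this parameter...
        values = [low + (i + rng.random()) * width for i in range(n_runs)]
        # ...then shuffled so strata are paired at random across parameters.
        rng.shuffle(values)
        columns.append(values)
    # Transpose columns into one tuple of parameter values per simulator run.
    return [tuple(col[j] for col in columns) for j in range(n_runs)]


# Hypothetical ranges for two simulator parameters.
design = latin_hypercube(10, bounds=[(0.5, 2.0), (0.0, 1.0)])
for run in design:
    print(run)
```

With 10 runs, each parameter is sampled once in every tenth of its range. A full grid with 10 levels per parameter would need 10 × 10 = 100 runs for these two parameters, and the gap grows rapidly as more parameters are added.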

Rougier has proposed a framework for combining the different sources of uncertainty inherent in climate science. The Met Office applied this framework when it contributed probabilistic predictions of future climate to the most recent UK climate impacts assessment.
